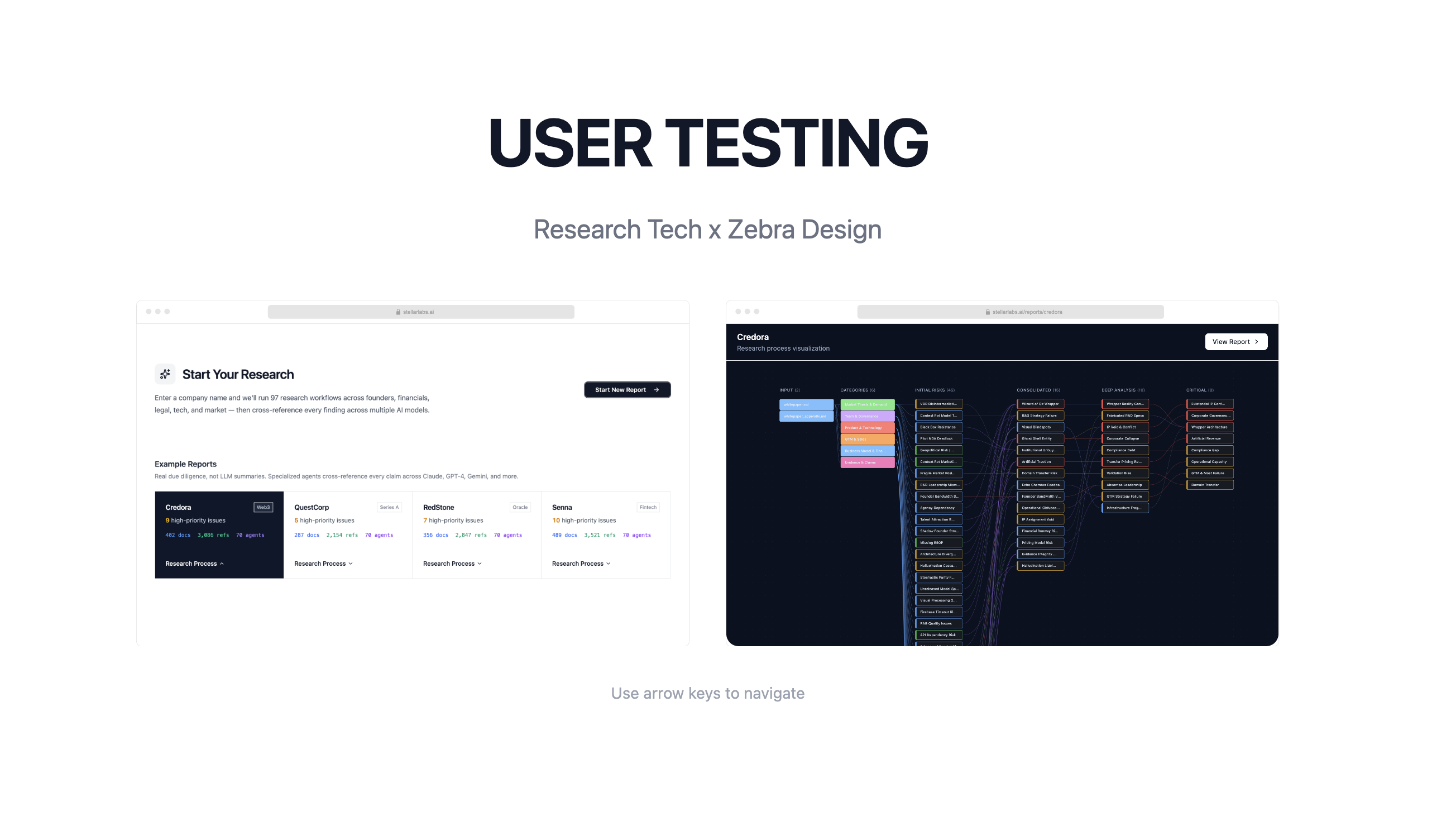

Research Tech

Zebra Design Sprint Progress

Design Files & Links

Documents

Deliverables

- Coded Prototype — React SPA across all 3 sprints

- Design System — Visual Style Brief

- User Testing Reports — Sprint 2 + Sprint 3

- Presentations — Sprint 1, 2, and 3

- Miro Board — 3 workshop canvases

- Workshop Materials — Transcripts + plans

- User Testing Data — 9 sessions (Fireflies transcripts + written notes)

- Example Reports — Stellar Labs + Credora

- Video Walkthroughs — 5 Screen.studio recordings

Updates

Sprint 3 is complete. All 7 build priorities were delivered, and all 7 design decisions were validated through user testing.

The product works. The remaining gap is depth features for power users — not foundational fixes.

Results (9 users, 2 sprints):

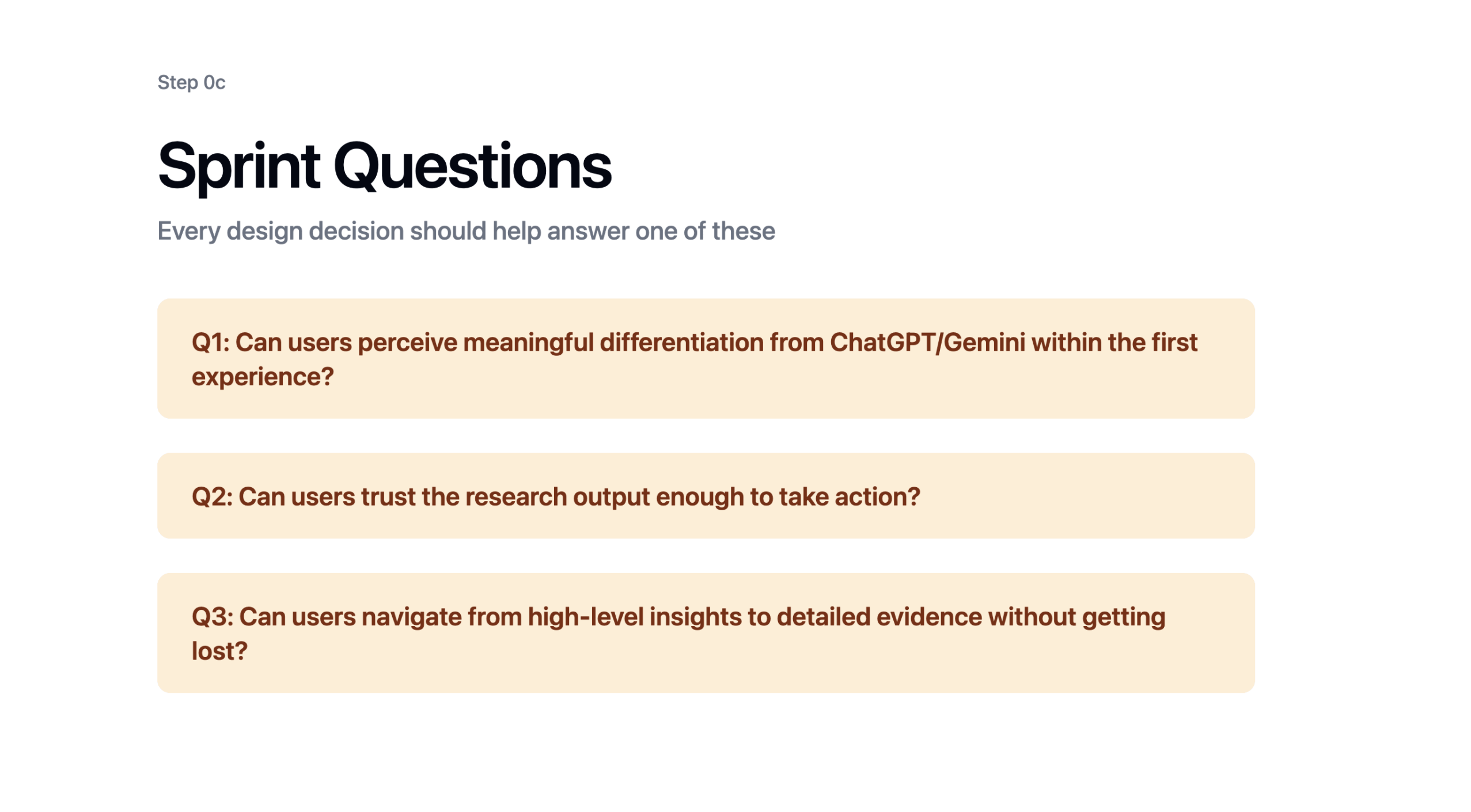

- Differentiation from ChatGPT/Gemini: 90% (4.5/5 ICP score) — every target user perceives meaningful differentiation

- Trust in research output: 80% (4/5) — all ICP users achieved Level 1 Trust (source visibility). Level 2 Trust (process transparency) is the upgrade path

- Navigation from insights to evidence: 100% (5/5) — solved and maintained across both sprints

What changed since Sprint 2:

- Session times more than halved (from ~60 min to ~25 min)

- User feedback matured from "What is this?" to "I want more depth"

- Evidence discovery improved: the share of users who found sources independently rose from 20% to 50%

Every change traces directly to user testing feedback.

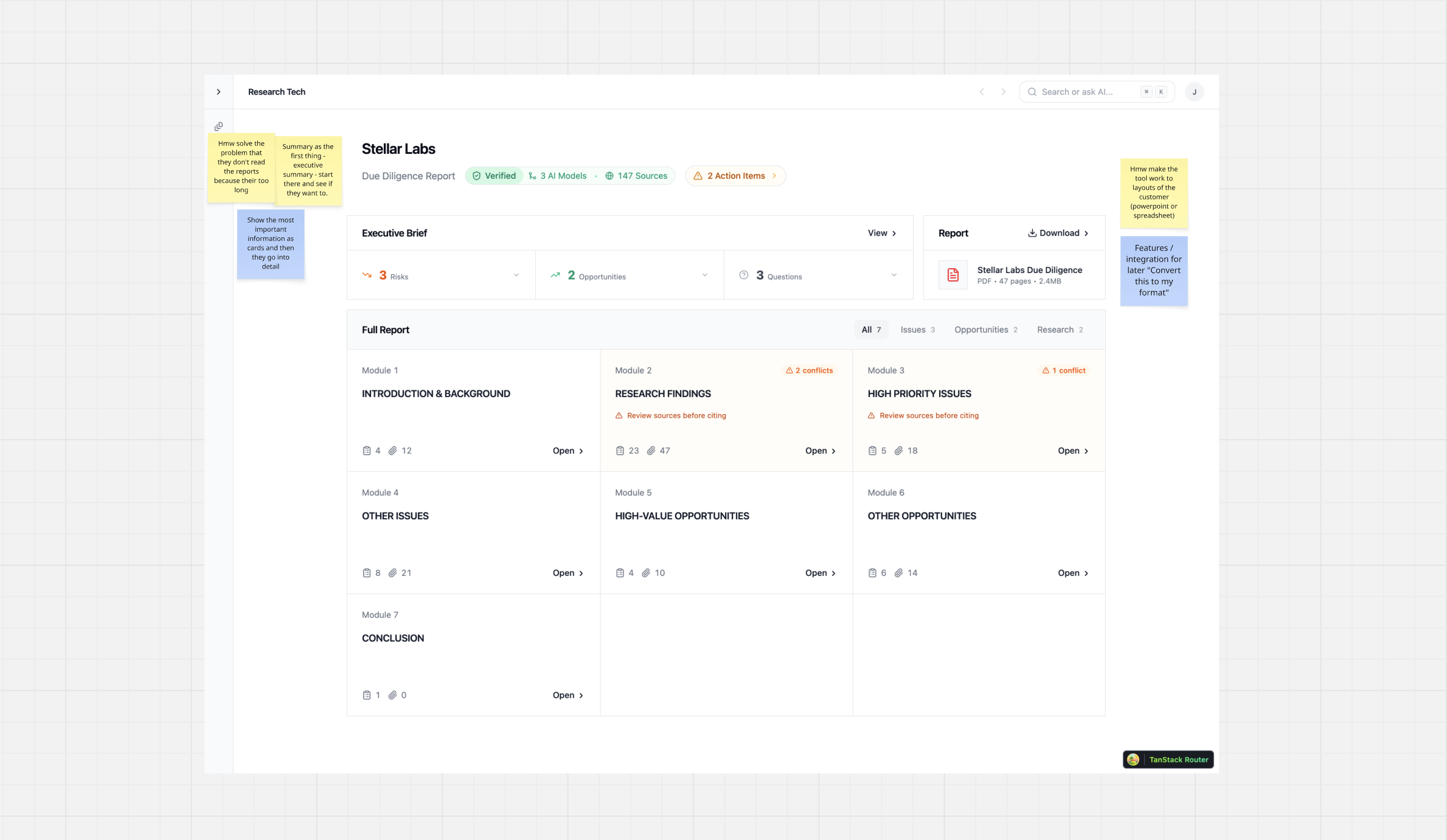

Sources & Trust Redesign — 5/9 users preferred direct source access over chat. Sources now appear first, with confidence levels (High/Medium/Low) and a rejected sources section showing what was checked and excluded.

"Sources. This is really good." — Jeremy Osborne
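The sources-first layout described above can be sketched as a small data model. This is a minimal illustration under stated assumptions, not the prototype's actual code: the `Source` shape, the `rejectionReason` field, and the `groupSources` helper are hypothetical names. Only the three confidence levels and the idea of a separate rejected section come from the update itself.

```typescript
// Confidence levels as named in the update (High/Medium/Low).
type Confidence = "High" | "Medium" | "Low";

// Hypothetical shape of a source entry; field names are assumptions.
interface Source {
  title: string;
  confidence: Confidence;
  rejected: boolean;
  rejectionReason?: string; // only meaningful when rejected is true
}

// Lower number means the source is listed earlier.
const CONFIDENCE_ORDER: Record<Confidence, number> = {
  High: 0,
  Medium: 1,
  Low: 2,
};

// Split sources into an accepted list (highest confidence first)
// and a rejected list showing what was checked and excluded.
function groupSources(sources: Source[]): { accepted: Source[]; rejected: Source[] } {
  const accepted = sources
    .filter((s) => !s.rejected)
    .sort((a, b) => CONFIDENCE_ORDER[a.confidence] - CONFIDENCE_ORDER[b.confidence]);
  const rejected = sources.filter((s) => s.rejected);
  return { accepted, rejected };
}
```

Keeping rejected sources in the same list with a flag, rather than discarding them, is one way to make the "what was checked and excluded" section cheap to render. The real backend may model this differently.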

Report Categories Restructured — Switched from signal-type grouping (Risks, Opportunities, Questions) to domain-based structure (Team, Market, Technical) with signal types as inline tags. Matches how investors think about due diligence.

"I've done the due diligence, I've highlighted the things I wanted to know about the team, technical aspects, market — and I don't see anything related to it." — Piotr Kondrat

Research Nodes Expanded — Technical users gravitated to exploring how conclusions were reached. Made the DAG full-bleed (86 nodes), added category filtering, hover preview, priority sorting, and simplified colors (9 to 3 states).

"I wanted to go back to that main side… I wanted to explore the nodes more." — Jeremy Osborne

DAG Interactive Navigation — Click any category node to filter the full 86-node graph. Hover to preview before committing. Critical issues sorted to top.

"If I click categories — I want the graph to update to give me just this category." — Kay
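The category filter and priority sort described above could look roughly like the sketch below. It is illustrative only and rests on assumptions: the `ResearchNode` shape, the three state names (`critical`, `attention`, `clear`), and both helper functions are hypothetical. The update only says that the graph has three color states, that clicking a category filters the graph, and that critical issues sort to the top.

```typescript
// Hypothetical names for the 3 simplified node states.
type NodeState = "critical" | "attention" | "clear";

// Hypothetical node shape; dependsOn lists the ids of upstream nodes.
interface ResearchNode {
  id: string;
  category: string;
  state: NodeState;
  dependsOn: string[];
}

// Lower number sorts first, so critical issues appear at the top.
const STATE_PRIORITY: Record<NodeState, number> = {
  critical: 0,
  attention: 1,
  clear: 2,
};

// Return the nodes to display: everything when no category is selected,
// otherwise only that category, sorted by priority in both cases.
function visibleNodes(nodes: ResearchNode[], category: string | null): ResearchNode[] {
  const matching = category === null ? nodes : nodes.filter((n) => n.category === category);
  return [...matching].sort((a, b) => STATE_PRIORITY[a.state] - STATE_PRIORITY[b.state]);
}

// Keep only edges whose two endpoints are both still visible,
// so the filtered graph has no dangling connections.
function visibleEdges(nodes: ResearchNode[]): Array<[string, string]> {
  const ids = new Set(nodes.map((n) => n.id));
  const edges: Array<[string, string]> = [];
  for (const node of nodes) {
    for (const dep of node.dependsOn) {
      if (ids.has(dep)) edges.push([dep, node.id]);
    }
  }
  return edges;
}
```

In a React component, `visibleNodes(allNodes, selectedCategory)` would typically be recomputed whenever the selected category changes. A hover preview could call the same function with the hovered category without committing the selection.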

16 Quick Fixes: Card spacing, processing time context, multiline chat input, navigation improvements, verification messaging, mobile optimizations — all directly from user feedback.

Final round of testing complete with 4 users (Jeremy Osborne, Piotr Kondrat, Pola, Kay). Combined with Sprint 2's 5 sessions, we now have 9 total user testing sessions across the project.

Key discovery — Two Levels of Trust:

- Level 1: "I can see the sources, I know where claims come from" — achieved for all 5 ICP users

- Level 2: "I can see the research process, what was checked, what was rejected, what has high/low confidence" — requested by the 2 most sophisticated users

This finding directly shaped the sources overhaul and defines the next development phase.

Planned the key changes to the user experience: a new dashboard, a sharing and report entry point, and the reworked setup flow. Building has started, but I'll polish the UX before sharing.

Scope addition: Simple landing page added to Sprint 3.

- Hero section with CTA

- Brief description + screenshot/demo

- Cal.com booking integration

Report-first entry, flexible setup flow, and category-based report view.

4 interviews complete. 1 more with Maciej Frankowicz tomorrow.

Next: Analyse research, make quick UX fixes, present results in workshop.

Workshop: Moved from Wednesday to Thursday (project pushed one day).

Final walkthrough of the prototype ahead of Monday's user testing sessions.

Watch Sprint Walkthrough

Opens in Screen.studio

Polishing the other screens and details from the last workshop tomorrow. Ready for user testing on Monday.

Watch Sprint Walkthrough


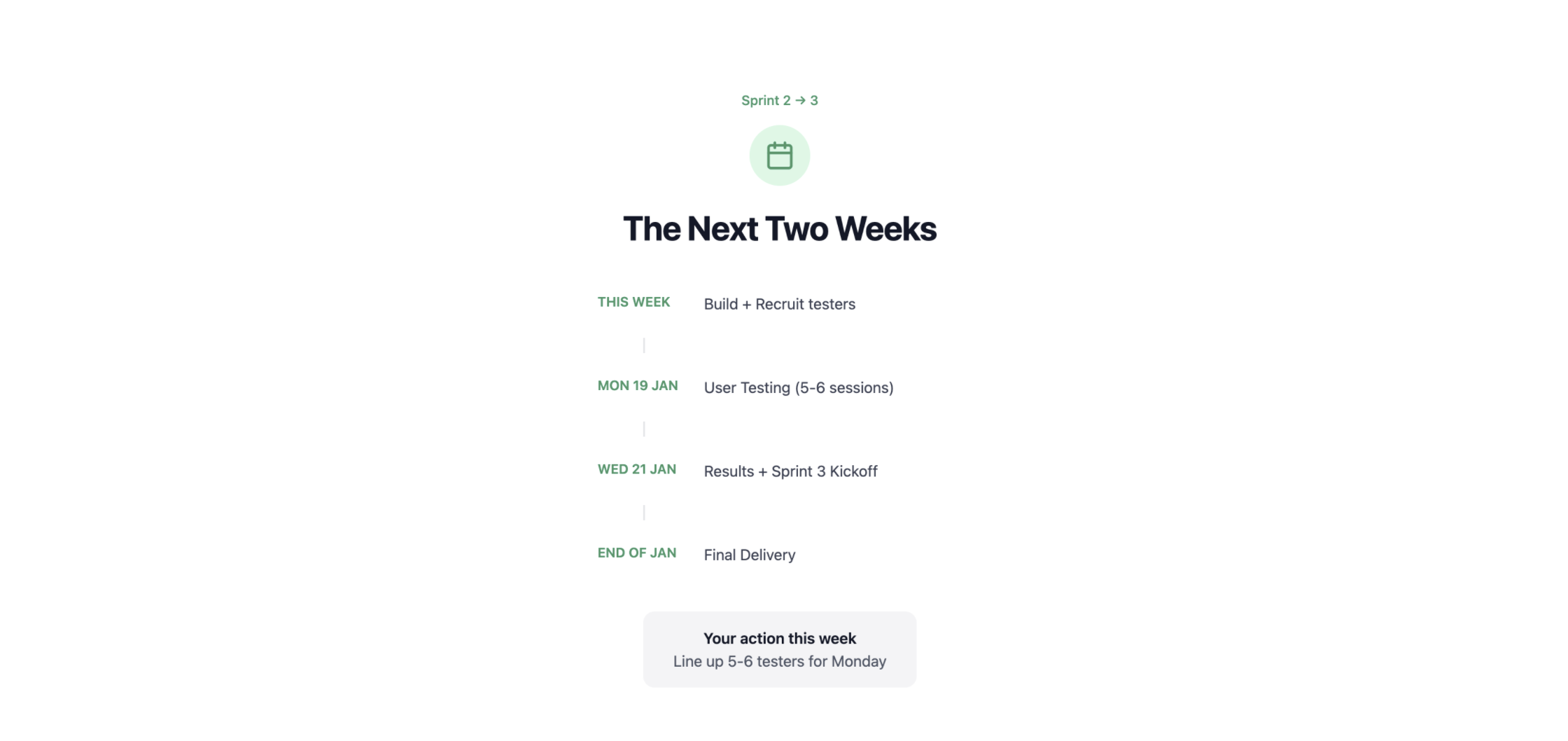

Sprint 2 schedule and Sprint 3 preview. User testing moved to Monday 19th to allow proper build time.

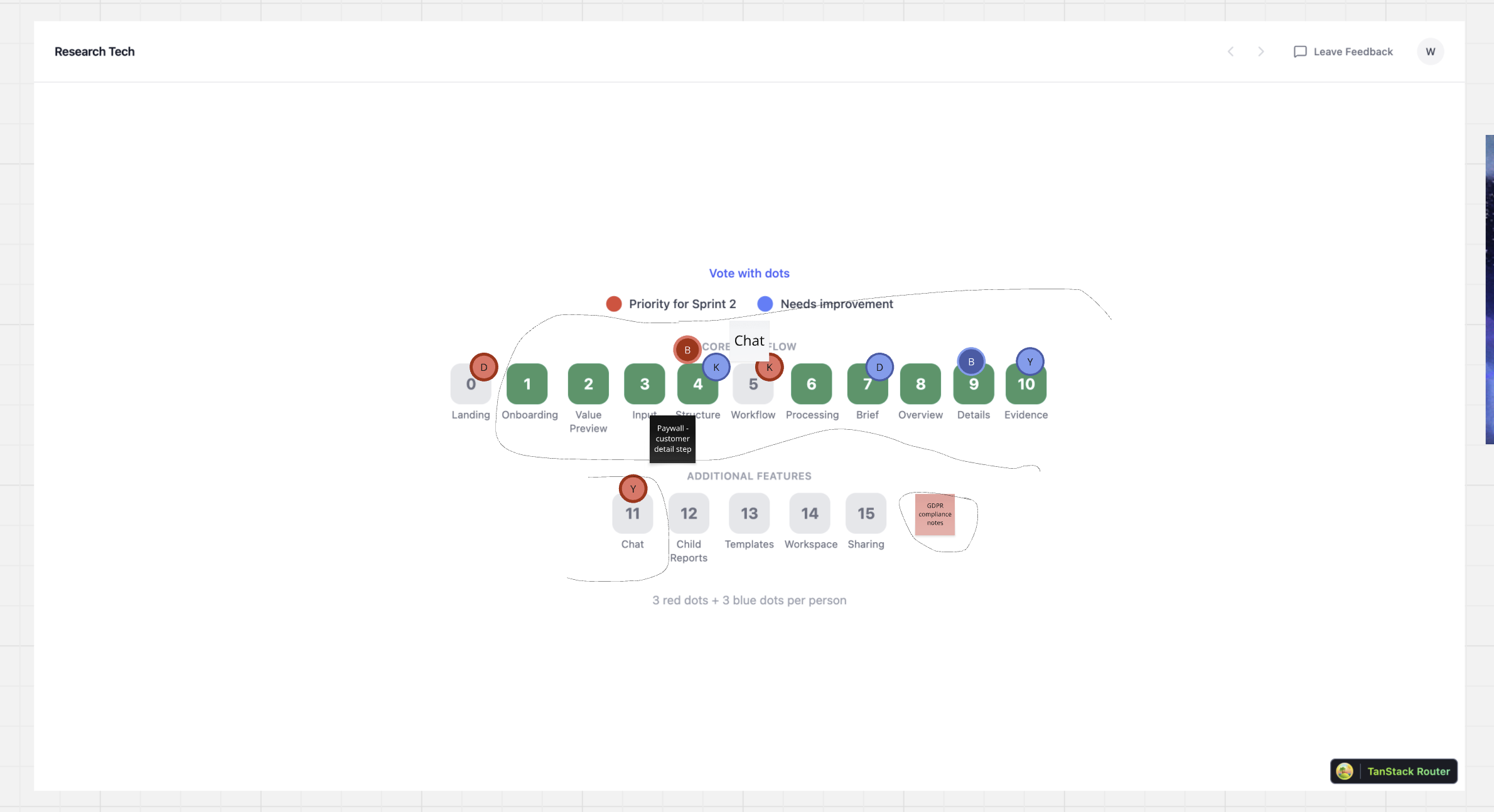

Workshop complete! We used a supervote process to prioritize Sprint 2 focus areas.

- Workflow/Chat is the main build priority — a chat and workflow-like screen to better onboard clients

- Report Review Adaptations — many refinements captured based on team feedback

- Timeline agreed — user testing moved to Monday 19th to allow proper build time

View Full Miro Board

This page shows what we set out to answer and what's in the prototype. Hit "Start Prototype" to click through.

Watch Sprint Walkthrough


Created from inputs from the Research Tech team.

Resources provided by the Research Tech team.

First workshop outcomes and updated sprint plan.

Sent to all workshop participants with details about what to expect during the sprints.

Read full email →

Calendar invites sent for all three sprint workshops at 11:00 AM CET.

Sprint 1 Workshop

Monday, Jan 5, 2026 · 11:00 AM CET · 3 hours

Map the onboarding and/or "Chat with your Diligence" flow

Sprint 2 Workshop

Monday, Jan 12, 2026 · 11:00 AM CET · 3 hours

Sprint 3 Workshop

Monday, Jan 19, 2026 · 11:00 AM CET · 3 hours

Project Overview

Sign up → Create project → First report insights. Authentication flow, project creation with progress indication, first report generation, and responsive design across devices.

Workflow screen for better client onboarding + Report detail refinements. Based on workshop voting, Sprint 2 focuses on:

Priority 1: Workflow (Step 5) — Chat-driven research selection with DAG visualization, giving users control over their diligence pipeline

Priority 2: Report Details — Refinements to Structure Preview, Brief, Details, and Evidence screens based on team feedback

User testing: Monday 19th with 5-6 target users

Report-first entry, simplified setup flow, category-based report structure, source trust redesign, research node expansion. 4 major overhauls and 16 quick fixes built from 9 user testing sessions across 2 sprints. ICP-weighted results: 90% differentiation, 80% trust, 100% navigation.

Phase 1 — Ship (front-end ready, backend connects)

Source API integration, confidence scoring, rejected source data, domain-based report categories

Phase 2 — Iterate (team decisions + scoping)

Cross-reference tracking, review step toggle, report taxonomy refinement

Phase 3 — Expand (next sprint / enterprise)

Living document + Kanban, team collaboration, enterprise validation

All 3 sprints complete. 9 user testing sessions across 2 sprints. Final delivery January 31, 2026.